Overview

- What's in a NAME?

- A schematic overview

- Particles and sub-grid-scale (Lagrangian) motion

- (Eulerian) Chemistry

- Parallelism:

- Existing threaded parallelism (particle decomposition)

- Distributed particle decomposition

- Distributed domain decomposition

- Development/Test Strategy

- A "staged de-replication"

- Results

- Volcanic Ash

- Chemistry

- Greenhouse Gas

- The future...

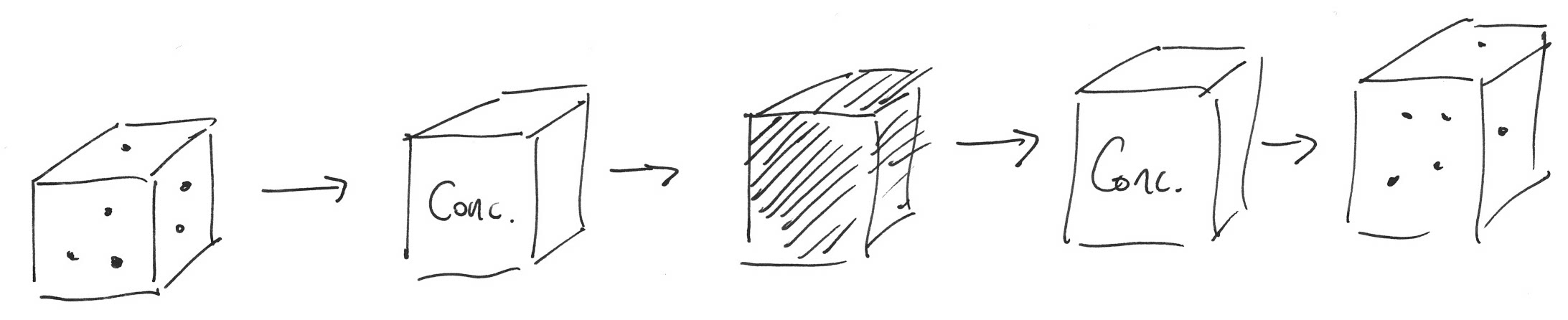

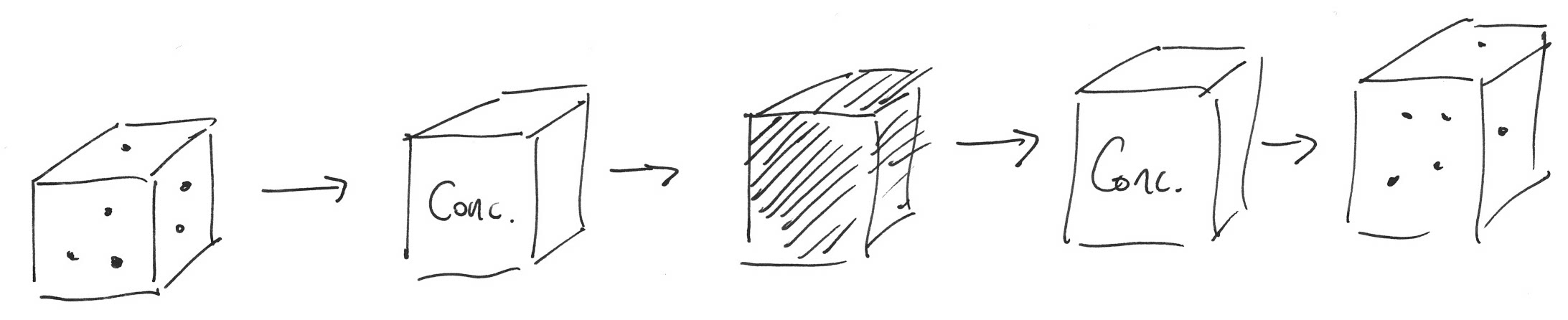

Schematically...

Mixed Lagrangian...

- Particle motion

- Advected by background wind field

- Other processes including convection/plume effects

- Random motion to represent turbulent scales not resolved

- Statistics

- Accuracy requires sufficient number of (pseudo-)particles

- Higher resolution requires more particles

...Eulerian

- Particles

- Each carry a payload of chemical constituents

- Require a sum to give a concentration at given location

- Chemistry

- A "black box" which is entirely local to a single Eulerian cell

- Concentrations must be "re-distributed" to particles

- A separate Eulerian advection facility is not considered here

Thread parallelism

Source generates particles

Update particles...

!$omp parallel do

do n = 1, nParticles

call particleUpdateMe(n, metData, ...)

end do

Particle data structure

type (Particle_)

integer (int64) :: iUP ! unique identifier

real (pos_t) :: x(3) ! position

integer (int32) :: iCoord ! coordinate system

integer (int64) :: rng(2) ! RNG state

...

type (Extra_), pointer :: extra ! optional extra data

double (real64) :: mass(:) ! chemical species

end type Particle_

Memory requirement

Particle_ ! c. 128 bytes

Extra_ ! c. 400 bytes (if required)

mass(:) ! typically 42 species (if required)

Output

Particles may contribute to output...

Distributed particle decomposition

A copy of the source generates all particles...

Particles assigned to MPI task based on unique index iUP

Update particles...

do n = 1, nParticlesLocal

call particleUpdateMe(n, metData, ...)

end do

Greenhouse Gas Benchmark

- UK plus surrounding region (backwards in time)

- NWP data: 1.5km, 57 vertical levels at 1 hour

- Original 480,000 particles (c. 30 minutes 1 node); 48 million < 4 hours

Output (again)

Particles may contribute to output...

- Solution

- Each task forms its own contribution to (replicated) output

- Communicate to add contributions

- Single MPI task performs actual output to file

Volcanic Ash Benchmark

- Askja (Iceland)

- NWP data: global UM at 3 hours

- Smaller: 750,000 particles; Larger: 7,500,000 particles

Chemistry

- Problem

- Chemistry performed on an Eulerian grid, so ...

- All particles in a given Eulerian cell must be present on same MPI task

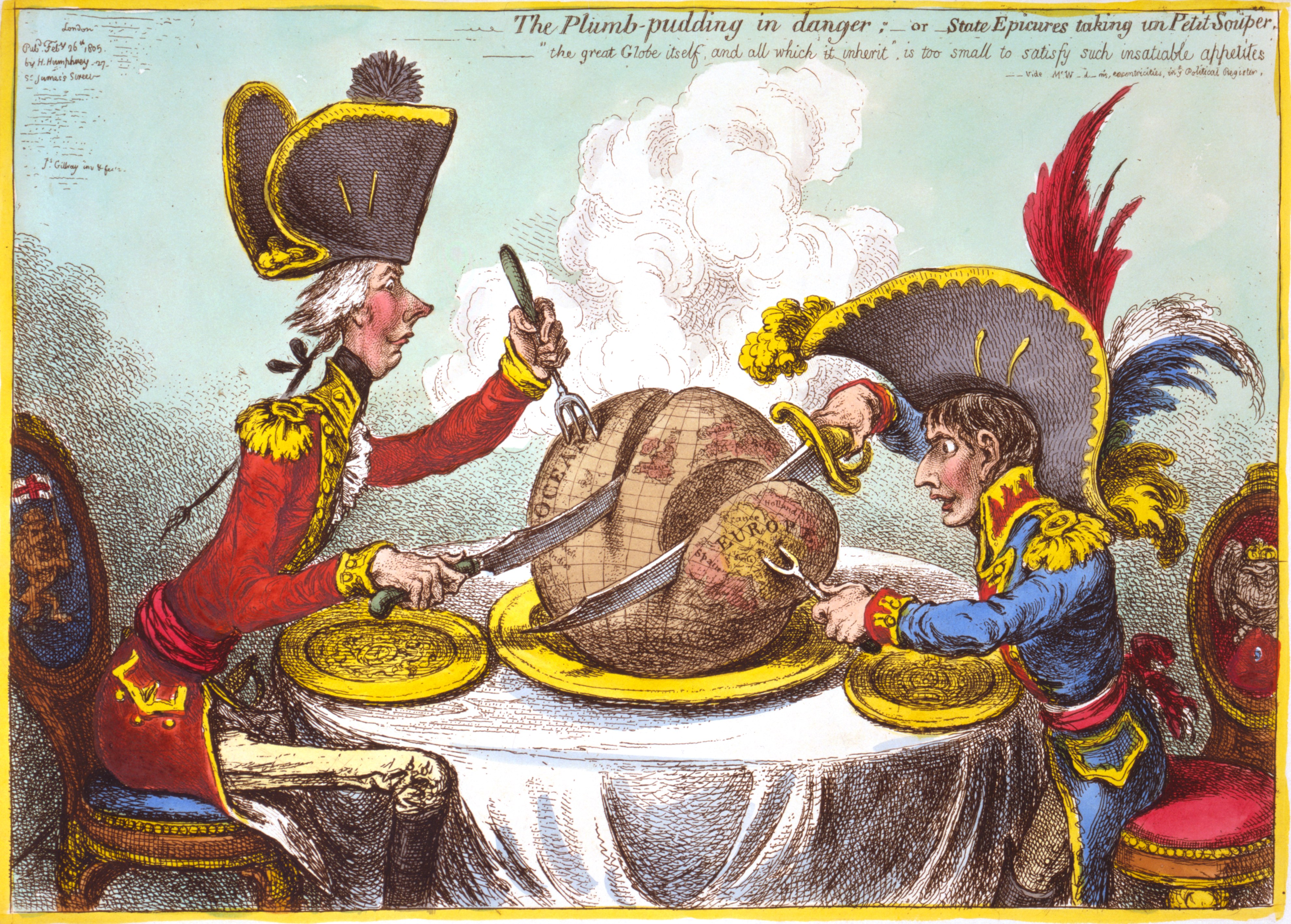

Reference: https://upload.wikimedia.org/wikipedia/commons/9/97/Caricature_gillray_plumpudding.jpg

Some machinery

- Particle memory management

- Complete re-write

- Separate from application concerns

- MPI data types

- For

Particle_structure - Also some components therein

- Used to form messages

- Patches

- Auxiliary list of particles for decomposition

- Helps to sort particles in horizontal and vertical

- (Uses Peano-Hilbert order in horizontal for potential load balance)

Recall...

Domain decomposition

A copy of the source generates all particles...

! Based on initial particle position (x,y,z)

if (isEulerian) then

! Assign to horizontal patch based on (x,y)

else

! Don't care...

! Assign to horizontal patch based on iUP

end if

Particle movement ...

- In fact:

- Group all particles crossing a particular boundary into single message

Chemistry parallelisation

- Eulerian concentrations

- Storage remains replicated

- Work (domain-) distributed

- Threaded parallelisation

- Implementation completely rewritten

- Based on patch structure (e.g., sorting in vertical)

- Scheduling/synchonisation issues removed (identified via profile)

- Updated concentrations

- Gather to rank 0 for output

- Eulerian physical processes/advection not considered

Chemistry Benchmark

- UK plus north-west Europe (Eulerian grid at c. 10km)

- NWP data: c. 0.5$^\circ$ in horizontal, 50 vertical levels at 4 hours

- STOCHEM with 42 species

Development

Strategy

- "Staged de-replication"

- Refactored output/errors (everything else replicated)

- Run multiple redundant copies (single output)

Reproducability

- Code gives same result independent of threads/tasks

- But not the same result as previous versions ...

- Random number generator initialisation per particle

- Serial algorithms replaced by parallel algorithms

Testing

No tests exsited in original (!)

- A number of levels introduced

- Assertions to enforce design-by-contract

- Unit tests for new functionality

- A number of standard (performance) benchmarks for repeatability

- Quality

- No warnings with

gfortran -Wall(new/refactored code) - Portability: GNU, NAG, Intel, Cray, (Windows?)

- Review by Met Office before introduction in trunk

Assertions

pure function patchXYIndex(patches, iXPH, iYPH) result(iPatchGlobal)

! Map patch (iXPH, iYPH) to one-dimensional patch index.

type (Patches_), intent(in) :: patches

integer, intent(in) :: iXPH

integer, intent(in) :: iYPH

integer :: iPatchGlobal

assert(1 <= iXPH .and. iXPH <= patches%nxPH)

assert(1 <= iYPH .and. iYPH <= patches%nyPH)

iPatchGlobal = peanoHilbert2DTo1D(patches%nPH, iXPH, iYPH)

assert(1 <= iPatchGlobal .and. iPatchGlobal <= patches%nGlobal)

end function patchXYIndex

Implementation via pre-processor macro

Unit tests

Stand-alone unit tests run for new functionality (MPI/threads)

- Coverage assessed via

gcov - e.g.,

gfortran --covergage -

lcovproduces a test report

Summary

Aim of the work:

- Produce a functional distributed memory version

- More memory allows more particles:

- Allow increased size/resolution while maintaining time-to-solution

- Maintain statistical accuracy

Exposed a number of bottlenecks:

- Output

- ASCII could be replaced by netCDF

- Release of particles

- Requires a parallel implementation

- Reading large NWP meteorological data sets

- Improved threaded parallelism should help

Credits

At UK Met Office

- Dave Thomson (Science lead)

- Ben Devenish (Benchmarks/Volcanos)

- Andrew Jones (Software/tests)

- Ben Evans (Project lead)

Credits

Photo: Arni Frioriksson

https://commons.wikimedia.org/wiki/File:Eyjafjallajokull-April-17.JPG

Improving performance of Met Office NAME code

Kevin Stratford (Proposal: IP07)

- Background

- Run operationally and for research

- Based on particles

- What's needed

- Fortran / OpenMP

- Running tests and benchmarks / assessing performance

Improving performance of Met Office NAME code

type (Particle_)

integer (int64) :: iUP

real (pos_t) :: x(3)

integer (int32) :: iCoord

.

.

.

end type Particle_

type (ParticleArray_)

integer (int64) :: iUP(:)

real (pos_t) :: x(3,:)

integer (int32) :: iCoord(:)

.

.

.

end type ParticleArray_

- What's in it for me?

- Experience working on a real code

- Met Office are engaged and interested in the work

Performance portability ...

Kevin Stratford (Proposal: EP28)

- Problem

- Want to run on both CPU and GPU

- Can we do it without writing two versions of the source code?

- Solution

- Need abstraction of the thread model / memory layout

- Various ways are available... (we have "targetDP")

... simulation of particle suspensions

Kevin Stratford (Proposal: EP28)

- CFD code "Ludwig"

- Some areas of code abstracted

- Want to extend to full coverage of moving particles

- Skills

- Must be happy with C / pointers / data structures

- OpenMP / GPU knowledge a plus

- What's in it for me?

- A key, growth, area in scientific computing

- Practical experience with world-class research code