Parallel Programming

© iStock.com/Photokanok

How to parallelise the Income Calculation example?

Consider how to parallelise the salaries example using the shared-variables model, i.e. how could 4 office mates add up the numbers correctly on a shared whiteboard?

Remember that you are not allowed to talk directly to your office mates - all communications must take place via the whiteboard. Things to consider include:

how do you decompose the calculation evenly between workers?

how do you minimise communication between workers?

how do you ensure that you do not have any race conditions?

Can you think of any other aspects that should be taken into account?

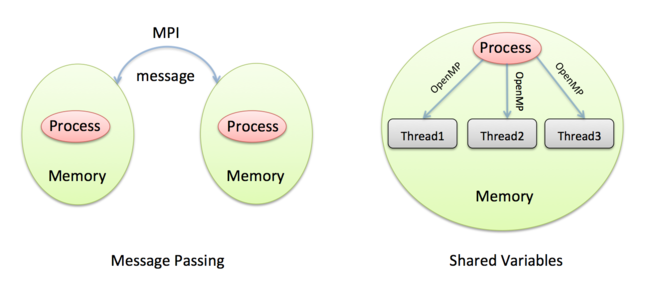

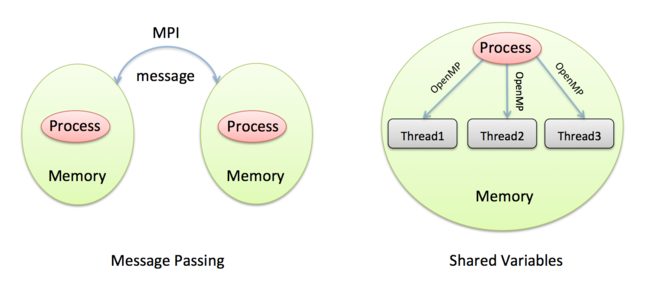

Message-Passing Model

The Message-Passing Model is closely associated with the distributed-memory architecture of parallel computers.

Remember that a distributed-memory computer is effectively a collection of separate computers, each called a node, connected by some network cables. It is not possible for one node to directly read or write to the memory of another node, so there is no concept of shared memory. Using the office analogy, each worker is in a separate office with their own personal whiteboard that no-one else can see. In this sense, all variables in the Message-Passing Model are private - there are no shared variables.

In this model, the only way to communicate information with another worker is to send data over the network. We say that workers are passing messages between each other, where the message contains the data that is to be transferred (e.g. the values of some variables). A very good analogy is making a phone call.

The fundamental points of message passing are:

the sender decides what data to communicate and sends it to a specific destination (i.e. you make a phone call to another office);

the data is only fully communicated after the destination worker decides to receive the data (i.e. the worker in the other office picks up the phone);

there are no time-outs: if a worker decides they need to receive data, they wait by the phone for it to ring; if it never rings, they wait forever!

The message-passing model requires participation at both ends: for it to be successful, the sender has to actively send the message and the receiver has to actively receive it. It is very easy to get this wrong and write a program where the sends and receives do not match up properly, resulting in someone waiting for a phone call that never arrives. This situation is called deadlock and typically results in your program grinding to a halt.

In this model, each worker is called a process rather than a thread as it is in shared-variables, and each worker is given a number to uniquely identify it.

Things to consider

When parallelising a calculation in the message-passing model, the most important questions are:

how are the variables (e.g. the old and new roads) divided up among workers?

when do workers need to send messages to each other?

how do we minimise the number of messages that are sent?

Because there are no shared variables (i.e. no shared whiteboard), you do not usually have to consider how the work is divided up. Since workers can only see the data on their own whiteboards, the distribution of the work is normally determined automatically from the distribution of the data: you work on the variables you have in front of you on your whiteboard.

To communicate a lot of data we can send one big message or lots of small ones, what do you think is more efficient? Why?

© iStock.com/adrian825

How to parallelise the traffic simulation?

Consider how you could parallelise the traffic model among 4 workers, each with their own whiteboards in separate offices, communicating by making phone calls to each other.

Remember that the cars are on a roundabout (we are using periodic boundary conditions) so cars leaving the end of the road reappear at the start.

To get you started:

think carefully about how the traffic model works; what are its basic rules?

think about the characteristics of the message-passing model;

how can you combine them?

which workers need to phone each other, when and how often?

You do not need to provide a clear-cut answer. Instead, list the things that you think need to be considered and why.

Extra Exercises

In fact, sending a message can be implemented in two different ways:

like making a phone call (synchronously) or

like sending an email (asynchronously).

The difference is whether the sender waits until the receiver is actively taking part (a phone call) or carries on with their own work regardless (sending an email).

Do you think that solving the traffic model in parallel is simpler using synchronous or asynchronous messages? Which do you think might be faster? Do you think the boundary conditions are important here?

Imagine that you want all workers to know the average speed of the cars at every iteration. How could you achieve this using as few phone calls as possible?

Solution to Traffic simulation in Message-Passing

Transcript

0:11 - Now we’re going to look at the traffic simulation but imagine how we could operate it in parallel. So I’ve got an even bigger board here, a bigger road. I’ve got three Chess boards stuck together. And so I have a road of length 24. And I have cars all over the road. So if we were operating on a shared memory computer in the shared-variables model, it wouldn’t really be a problem in running this simulation in parallel. This would be our very large, shared whiteboard, and there would be lots of workers together in the same office– all able to read and write to the whiteboard.

0:41 - So for example, we could just decide if there were two workers that one worker updated all of these pawns, and the other worker updated all of these pawns, there might have to be some interaction, some collaboration between us to make sure that we are always on the same iteration. That we didn’t run ahead of each other. But in principle, there’s no real problem here. However, what’s much more interesting for this simulation is to look at how you parallelise it in distributed memory using the message-passing model. So what happens there, is we’re going to imagine this is split up over three people, three workers, but they’re in three separate offices.

1:13 - So these are three separate whiteboards, each in a different office, and I’m operating on this small whiteboard here. All I can see is my own whiteboard. I can’t see what’s going on in these other two offices.

1:33 - Now in the message-passing parallelisation I only have access to my own small whiteboard. And so now we can see there’s immediately a problem. For example, I can update this pawn. I know he can’t move. That one can’t move. That one can move. And that one can. But I have a problem at the edges. I have two problems. One is, I don’t know if this pawn can move, because I don’t know what’s happening over here. This is the piece of board which is owned by the person upstream from me.

2:00 - And also, I don’t know if I should place a new pawn in this cell here, because that depends on the state of the board owned by the person who’s downstream from me. So the only way to solve this is to introduce communication. And in the message-passing model, communication is done through passing messages. And one analogy is making phone calls. So what I need to do, I need to pick up the phone and I need to phone my neighbours both to the left and to the right. I need to phone them up and say, OK, what’s going on here? What pawns do you have in your cell here? And I’ll tell my neighbour what’s going on here.

2:37 - And then I need to make another phone call upstream to ask the person, what’s going on in your cells there? And to tell them what’s going on in my edge cells. Having communicated with my fellow workers, I’m now in a situation where I can update the simulation on my piece of board, because I know what’s going on on the edges. So for example, I know if there’s a pawn who needs to move into this square here. Or I know if there’s a gap here and this pawn can move off. So I can then update all my pawns on my board. And then on the next iteration, I have to again communicate with my fellow workers.

3:08 - I need to communicate with my neighbours to the left and to the right to find out what the new stage of the pawns on the edges of their boards are. So the whole simulation continues in this process of communication and then calculation. Communication to find out what’s going on with your fellow workers. And then calculation, when you locally update your own chess board. There’s one extra thing we need to do this simulation, which is work out the average speed of the cars. So let’s take a situation, for example, where this pawn can move and there is no pawn coming in here. So we’ll say, OK, this pawn can’t move. This pawn moves, that’s one move. That makes two moves.

3:44 - And this one, that’s three moves. So I know that three pawns have moved. But to calculate the average speed of the cars, I need to know how many pawns have moved on the entire road when in fact, I can only see a small section of the road. So not only do we need to communication with our fellow workers to do a single update to perform a single iteration, to find out what’s going on the edges of our board, we also need to communicate with them to work out what the average speed is, to work out how many pawns have moved on their board.

4:10 - So whenever I want to work out what the average speed is, I have to pick up the phone and phone all of my fellow workers– that’s the simple way of doing it. Asking them how many of their pawns have moved. And then I get the totals and I can add them all together. So you can see that not only does updating the simulation require communication, even simple calculations like how many pawns have moved requires communication. Because I only know how many pawns have moved on my piece of road, but not what’s happening on the other pieces of road which are on other people’s whiteboards in other offices.

In this video David describes the basics of how you can parallelise the traffic model using message passing, i.e. on a distributed-memory machine.

Try to list the most important points of this parallelisation. Was there anything that you failed to consider when coming up with your answer? For example, how does each worker know whether it’s supposed to call or wait for a call? Can you think of any other rules that need to be established for this to work?

Hopefully, you now have a better understanding of how both programming models work and how they differ from each other. In the next two steps we will talk about the actual implementations of both models.

© iStock.com/georgeclerk

MPI and processes

So far we have discussed things at a conceptual level, but it’s worth going into some of the details, particularly so you are familiar with certain terminology such as process, thread, MPI and OpenMP.

Message Passing

The way that message-passing typically works is that you write one computer program, but run many copies of it at the same time. Any supercomputer will have ways to let you spread these programs across the nodes, and we normally ensure we run exactly one copy of the program for every CPU-core so that they can all run at full speed without being swapped out by the operating system. From the OS’s point of view, each program is a separate process and by default they all run completely independently from each other.

For example, if you run a Word Processor and a Spreadsheet Application at the same time, each of these becomes a separate process that can only run on a single CPU-core at a time. In the message-passing model, we exploit the parallel nature of our distributed-memory computer by running many processes. The only real differences from the word processor and spreadsheet example is that every process is a copy of the same program, and that we want our parallel processes to work together and not independently.

Each process only has access to its own memory, totally separate from the others (this is strictly enforced by the OS to ensure that, for example, your Word Processor cannot accidentally overwrite the memory belonging to your Spreadsheet Application!). This way of implementing message-passing is called the Single Program Multiple Data or SPMD approach.

When they want to send messages to each other, the processes call special functions to exchange data. For example, there will be a function to send data and a function to receive data. These functions are not directly part of the program but stored in a separate library which will be pre-installed on the supercomputer (if you are familiar with the way that applications are created from source code, this means that the compiler is not directly involved in the parallelisation).

Almost all modern programs use the Message-Passing Interface library - MPI. Essentially, MPI is a collection of communication functions, that can be called from any user process.

So, to summarise:

the message-passing model is implemented by running many processes at the same time;

each process can only run on a single CPU-core and is allocated its own private memory;

inter-process communication is enabled by using the MPI library and so does not require a special compiler;

this is also called the SPMD approach.

Can you see any problems with the Message-Passing approach if one of the nodes has a hardware failure and crashes? As supercomputers are getting larger does this become a more or less of an issue?

© iStock.com/Westhoff

OpenMP and threads

Terminology Quiz

Question 1

What does the term programming model describe?

A) a particular kind of computer simulation

B) a specific computer programming language

C) a high-level view of how to solve a problem using a computer

D) the low-level details of how a particular computer is constructed

Solution

C) - it is concerned with the high-level methods we use to solve a problem, not the low-level details.

Question 2

What is a race condition in the shared-variables model?

A) when a CPU-core is expecting to receive a message but it never arrives

B) when two CPU-cores have different values for some private variable

C) when lack of synchronisation leads to one CPU-core running ahead of the others

D) when two CPU-cores try to modify the same shared variable at the same time

Solution

D) - this can cause erratic results and we need some form of synchronisation to fix it.

Question 3

Which of these could cause deadlock in the message-passing model?

A) a CPU-core asks to receive a message but no message is ever sent to it

B) a CPU-core asks to receive a message from another a few seconds before it is sent

C) a CPU-core sends a message to another a few seconds before it is ready to receive it

D) two CPU-cores try to modify the same shared variable at the same time

Solution

A) - this is like waiting forever for someone to phone you.

Question 4

Which of the following best describes the process of decomposing a calculation?

A) deciding which variables should be private and which should be shared

B) deciding which parts of a calculation can be done independently by different CPU-cores

C) choosing between the shared-variables and message-passing models

D) running a parallel program on a parallel computer

Solution

B) - Decomposing a problem means deciding how to do it in parallel on multiple CPU-cores

Question 5

What is a cellular automaton?

A) a type of computer specifically built to run simple computer simulations

B) a modular system for building parallel computers from simple cellular components

C) a computer simulation technique based on repeated application of simple rules to a grid of cells

D) a parallel programming model

Solution

C) - the traffic model is a good example of a simple cellular automaton